Why should you care about low GPU usage?

Although the costs of computing and storage have dropped substantially in the past decade, it is still important to maximize GPU utilization and minimize unnecessary data copies. Idle GPUs and redundant copies both waste money and time, which matters all the more when there is constant pressure to shorten time-to-market.

For teams working on smaller datasets, these requirements can be addressed without much code overhead. However, for bigger, distributed teams working on terabyte-scale datasets, these requirements represent a complex multidisciplinary engineering challenge. Simply put, the conventional way to maximize GPU utilization is to download the entire dataset to a local machine before a single epoch can run.

This case study is written for those in the second camp. By combining SageMaker with Hub, it demonstrates how teams can get the significant benefits of Amazon’s cloud infrastructure without running into IO issues or sacrificing GPU utilization. GPU utilization (or GPU usage) is one of the primary system metrics to observe during training runs. In an ideal case, your program should have high GPU utilization, minimal CPU (the host) to GPU (the device) communication, and no overhead from the input pipeline.

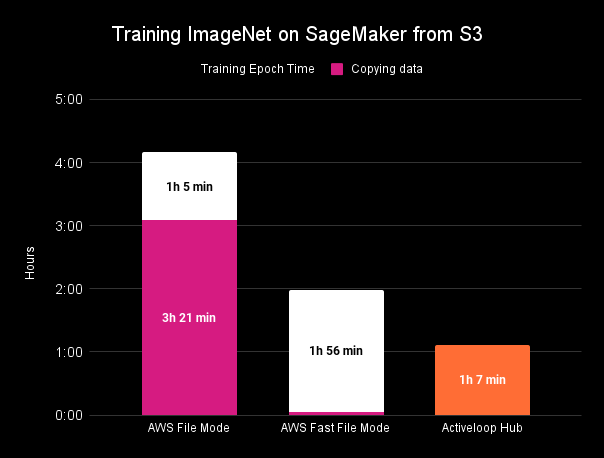

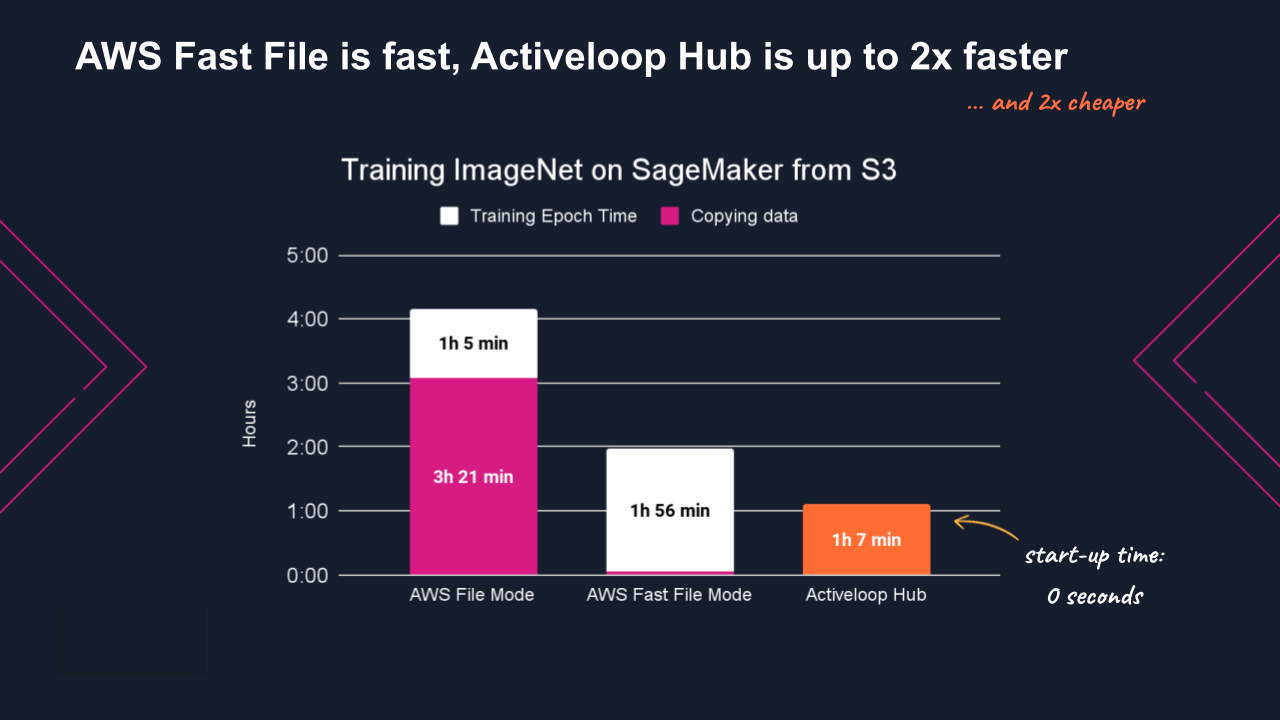

In addition, with Hub, AWS SageMaker users are able to start training right away, without having to copy their data. Instead, data scientists can stream their computer vision datasets directly to SageMaker with very little effort, and achieve up to 2x faster and cheaper machine learning cycles compared to using built-in SageMaker tools.

Cloud object stores are not optimized for machine learning

While modern cloud object stores are extremely reliable and performant, they are not optimized for machine learning datasets, which can easily top terabytes and have unique read patterns (random batch access in a sequential read).

Reading data from buckets, a few objects at a time, takes considerable time. Anyone who has worked with terabyte-scale datasets knows that dense, numerical data feels heavy. Pushing data over unreliable wires can feel like squeezing ketchup out of a bottle. For industrial ML, where it’s not unusual to run tens of thousands of epochs, that drag can significantly extend iteration cycles.

As a result, practitioners face a difficult dilemma. They can store data on cloud object stores and be IO-bound. Or they can keep data local and incur the overhead cost of creating and maintaining datasets across multiple machines.

Since ML datasets can easily reach terabyte-scale, neither option is sustainable for enterprise workloads.
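To put a rough number on the "download first" side of that dilemma, the sketch below estimates how long a full copy takes before training can start. The function name and the figures (a 1 TB dataset, a sustained 100 MB/s connection) are illustrative assumptions, not measurements from this case study.

```python
def download_hours(dataset_bytes: float, throughput_bytes_per_s: float) -> float:
    """Hours needed to copy a dataset at a sustained throughput."""
    if throughput_bytes_per_s <= 0:
        raise ValueError("throughput must be positive")
    return dataset_bytes / throughput_bytes_per_s / 3600


TB = 10**12
MB = 10**6

# Assumed figures: a 1 TB dataset over a sustained 100 MB/s connection.
hours = download_hours(1 * TB, 100 * MB)
print(f"{hours:.1f} hours before the first epoch can start")
```

The same copy has to be repeated on every machine that trains on the data, which is where the maintenance overhead comes from.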

How to fix low GPU usage with Activeloop Hub

Hub is designed to break this dilemma. Hub enables you to store data in the cloud and lets you train your models without having to spend many hours downloading the data.

Hub, in a nutshell, formats your datasets for deep learning projects (tensors are the main primitives in Hub). It also fetches data from a remote source, applies transformations, creates batches and sends them to a local GPU.

By storing unstructured data in Hub format (chunked, compressed arrays on the cloud), practitioners can get the best of both worlds. They can store arbitrarily large datasets on cloud object stores, thus benefiting from infrastructure scale, code maintainability and data accessibility. They can also access data with high throughput (even with S3’s request-rate limit of roughly 5,500 GET requests per second per prefix), thus maintaining high GPU utilization.
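Why chunking helps with request limits can be shown with a small sketch. It is not Hub's actual storage layout, only an illustration under assumed numbers: many small samples are grouped greedily into fixed-size chunks, so the number of object-store reads drops from one per sample to one per chunk. The function name, the 100 KB sample size and the 8 MB chunk size are all hypothetical.

```python
from typing import Iterable, List


def pack_into_chunks(sample_sizes: Iterable[int], chunk_bytes: int) -> List[List[int]]:
    """Greedily group consecutive samples into chunks of at most chunk_bytes.

    Returns a list of chunks, each holding the indices of its samples.
    A sample larger than chunk_bytes gets a chunk of its own.
    """
    chunks: List[List[int]] = []
    current: List[int] = []
    used = 0
    for index, size in enumerate(sample_sizes):
        # Close the current chunk if this sample would overflow it.
        if current and used + size > chunk_bytes:
            chunks.append(current)
            current, used = [], 0
        current.append(index)
        used += size
    if current:
        chunks.append(current)
    return chunks


# Assumed figures: 16,000 images of 100 KB each, packed into 8 MB chunks.
sizes = [100_000] * 16_000
chunks = pack_into_chunks(sizes, chunk_bytes=8_000_000)
print(f"{len(sizes)} per-object reads become {len(chunks)} chunk reads")
```

Fewer, larger reads leave more headroom under any per-second request limit, at the cost of fetching a whole chunk even when only one sample in it is needed.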

Installation

Hub can be installed with pip. Alternatively, you can download it from the source.

For this case study, you will need Hub v2.0 or higher and SageMaker’s PyTorch SDK. This will let us easily run training jobs on SageMaker infrastructure.

    pip install hub

Creating Hub Datasets

In this section, we will download a dataset, store it in Hub format and push it to Amazon’s S3.

Getting Started with Activeloop Hub

Written in Python, Hub is designed to look and feel like other popular PyData packages.

Hub can be installed with pip and has few dependencies. It relies on common data structures (e.g., NumPy arrays), algorithms (e.g., JPEG for image compression) and libraries (e.g., boto for S3). Syntactically, it is inspired by the popular HDF5. Finally, it plays well with contemporary ML frameworks such as PyTorch and TensorFlow.

In fact, if you’ve used tf.data or HDF5 in the past, you will recognize many familiar elements in Hub’s design principles. And if not, there are plenty of tutorials, notebooks, references and documentation, as well as an active community.

Stanford’s Cars dataset: storing computer vision data in Hub format

We will use Stanford’s Cars dataset. It is a small dataset of 16K images across 196 classes. Although we are using a tiny classification dataset, we could have used much larger (perhaps petascale) datasets and other data types (not just images but audio or text). So while storing a dataset of this size on S3 is overkill, it will highlight the fundamental steps for working with larger datasets.

We will create an “empty” Hub dataset with the appropriate destination. It is empty in the sense that it contains only meta information about the dataset (where to store data, what kind of compression algorithm should be used) and no samples yet.

    import hub

    ds = hub.empty("s3://bucket/dataset/cars", overwrite=True)

With the empty dataset in hand, we can populate it with the Cars dataset. Although data in Hub format can be stored as-is, we could also apply off-the-shelf compression to achieve better performance (should go without saying: you should use the same algorithm for compression and decompression).

    import os

    import hub
    import numpy as np
    from tqdm import tqdm

    # fns (a list of image paths) and class_names (a list of class labels)
    # are assumed to be defined already.
    with ds:
        # Create tensors
        ds.create_tensor("images", htype="image", sample_compression="jpeg")
        ds.create_tensor("labels", htype="class_label", class_names=class_names)

        # Add metadata
        ds.info.update(description="My Favourite Cars")
        ds.images.info.update(camera="SLR")

        for fn in tqdm(fns, total=len(fns)):
            # The name of the parent directory is the class label
            label_text = os.path.basename(os.path.dirname(fn))
            label = class_names.index(label_text)

            # Append data
            ds.images.append(hub.read(fn))      # append to images tensor
            ds.labels.append(np.uint32(label))  # append to labels tensor
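The loop above uses `fns` and `class_names` without showing where they come from. A minimal sketch of one way to build them follows, assuming the images sit in a `root/<class_name>/<image>.jpg` layout (which is what deriving the label from the parent directory implies). The helper name `list_images` and the `.jpg` extension are assumptions for illustration.

```python
import glob
import os
from typing import List, Tuple


def list_images(root: str) -> Tuple[List[str], List[str]]:
    """Return (file paths, class names) for a root/<class_name>/<image>.jpg layout."""
    # Every sub-directory of root is treated as one class.
    class_names = sorted(
        name for name in os.listdir(root)
        if os.path.isdir(os.path.join(root, name))
    )
    # glob.escape guards against special characters in the root path itself.
    pattern = os.path.join(glob.escape(root), "*", "*.jpg")
    fns = sorted(glob.glob(pattern))
    return fns, class_names
```

With such a helper, `fns, class_names = list_images(path_to_dataset)` would supply both inputs, and sorting keeps the label indices stable between runs.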

Finally, a Hub dataset contains valuable information about the dataset. If we want to peek under the hood, we can do so:

    ds.info
    ds.images.info
    ds.labels.info.class_names

Pushing Hub datasets to remote

After we’ve populated our Hub dataset, it will be automatically pushed to the specified destination. There are no additional steps required: there is no need to pickle, manage connection pools, compress data, create boto clients or SCP data.

In this example, we will push our dataset to Hub’s Platform, a storage service free for individuals. It is maintained by Activeloop, the company behind Hub, and includes visualization tools and permissioning features.

To use the free storage service (up to 300 GB), we can log in from the command line:

    activeloop register
    activeloop login -u username -p password

Of course, we could have used a private S3 bucket and passed the appropriate credentials to Hub:

    creds = {'aws_access_key_id': 'abc', 'aws_secret_access_key': 'xyz', 'aws_session_token': '123'}

    hub.load('s3://...', creds=creds)

This setup would require more configuration since we would have to deal with security, network, access tokens, etc.
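Part of that configuration is keeping secrets out of the source code. As a hedged sketch, the credentials dict can be assembled from the standard AWS environment variables instead of string literals. The helper name `creds_from_env` is hypothetical; the dict keys match the ones used in the snippet above.

```python
import os
from typing import Dict, Mapping


def creds_from_env(env: Mapping[str, str] = os.environ) -> Dict[str, str]:
    """Build a credentials dict from the standard AWS environment variables."""
    creds = {
        "aws_access_key_id": env["AWS_ACCESS_KEY_ID"],
        "aws_secret_access_key": env["AWS_SECRET_ACCESS_KEY"],
    }
    # The session token is only present for temporary credentials.
    token = env.get("AWS_SESSION_TOKEN")
    if token:
        creds["aws_session_token"] = token
    return creds


# Demonstration with a stand-in environment rather than real secrets.
example_env = {"AWS_ACCESS_KEY_ID": "abc", "AWS_SECRET_ACCESS_KEY": "xyz"}
print(sorted(creds_from_env(example_env)))
```

The result can then be passed as the `creds` argument in the same way as the hard-coded dict.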

Training the Model with SageMaker

What is Amazon SageMaker?

Amazon SageMaker is a managed service in the Amazon Web Services (AWS) toolkit. It comprises tools to build, train and deploy machine learning models, as well as manage them at scale. Essentially, Amazon SageMaker is a machine learning platform built to greatly reduce training time with optimized infrastructure. With Hub, the benefits of SageMaker are more pronounced, thanks to the added simplicity of streaming datasets to SageMaker and the reduction in total training time (up to 2x faster model training compared to SageMaker’s built-in solutions), as we presented at an AWS ML workshop last month.

In this section, we will accomplish three things.

First, we define our training script. There is nothing fancy here: just a simple pretrained ResNet-50 model. Its purpose is to consume data from Hub and, if Hub works as advertised, to do so fast enough that the GPU stays busy instead of waiting on data.

Then we instantiate a SageMaker object. It is responsible for spinning up and provisioning cloud infrastructure, among other things. Rather than uploading data from a local machine or pointing SageMaker to an S3 bucket, we tell SageMaker to fetch from a unique Hub location.

Finally, we launch the training job and examine GPU usage. There are several ways to monitor GPU utilization: in addition to nvidia-smi, there are fancier tools like Weights and Biases, which automatically log system metrics. Whatever the case, SageMaker reads from Hub and, as we will see, a Hub dataset must prefetch “just enough” data in order to maximize GPU performance. This, of course, is easier said than done, since there are several constraints (e.g., S3 throttling, decompression) that need to be addressed.
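For the nvidia-smi route, a minimal sketch of polling utilization from Python is shown below. It shells out to `nvidia-smi` with its CSV query options and parses one percentage per GPU. The helper names are hypothetical, and the live query only works on a machine with an NVIDIA driver installed, so the example exercises the parser on a sample string instead.

```python
import subprocess
from typing import List

# Ask nvidia-smi for the utilization of each GPU as bare numbers, one per line.
QUERY = ["nvidia-smi", "--query-gpu=utilization.gpu", "--format=csv,noheader,nounits"]


def parse_utilization(output: str) -> List[int]:
    """Parse one utilization percentage per line, one line per GPU."""
    return [int(line.strip()) for line in output.splitlines() if line.strip()]


def query_gpu_utilization() -> List[int]:
    """Run nvidia-smi and return per-GPU utilization; needs an NVIDIA driver."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_utilization(result.stdout)


# Parsing a sample in the expected output format (two GPUs).
print(parse_utilization("93\n95\n"))
```

Calling `query_gpu_utilization()` periodically from a background thread during training is one simple way to log the metric alongside the loss.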

Defining Training script

We use the popular ResNet-50 model from torchvision, PyTorch’s computer vision library. For this case study, it doesn’t matter which model or framework we use as long as it can perform matrix multiplication on GPUs.

    from torchvision import models

    net = models.resnet50(pretrained=True)

We may also want to transform data before it is consumed by our model, which will be handled by the function _get_train_data_loader.

    import logging

    import hub
    from torchvision import transforms

    logger = logging.getLogger(__name__)


    def _get_train_data_loader(hub_path, train_batch_size, num_workers):
        logger.info("Get train data loader")

        tform = transforms.Compose([
            transforms.ToPILImage(),
            transforms.Resize((224, 224)),
            transforms.ToTensor(),
            transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5]),
        ])

        # Transform the image; pass the label through unchanged
        def transform(x):
            return {'images': tform(x['images']), 'labels': x['labels']}

        ds = hub.load(hub_path)

        return ds.pytorch(batch_size=train_batch_size, transform=transform, num_workers=num_workers)
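The last two steps of the transform pipeline are plain arithmetic, sketched here without any framework: `ToTensor` scales 8-bit pixel values from [0, 255] to [0, 1], and `Normalize` with a mean and standard deviation of 0.5 then maps that range to [-1, 1]. The two helper functions are illustrative stand-ins for the per-value computation, not torchvision code.

```python
def to_unit_range(pixel: int) -> float:
    """Per-value effect of ToTensor: scale a uint8 pixel from [0, 255] to [0, 1]."""
    return pixel / 255.0


def normalize(value: float, mean: float = 0.5, std: float = 0.5) -> float:
    """Per-value effect of Normalize: subtract the mean, divide by the std."""
    return (value - mean) / std


# The darkest and brightest pixels land on the ends of the [-1, 1] range.
for pixel in (0, 255):
    print(pixel, "->", normalize(to_unit_range(pixel)))
```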

As for the training script, we follow the classic “training loop” pattern, which should be familiar to most: shunt data through the model to generate outputs (forward pass), use outputs to compute loss and gradients (backward pass), and apply gradients to weights (parameter update).

    import time

    import torch
    import torch.nn as nn
    import torch.optim as optim

    # net and logger are defined in the snippets above.


    def train(args):
        is_distributed = len(args.hosts) > 1 and args.backend is not None
        use_cuda = args.num_gpus > 0
        logger.debug("Number of gpus available - {}".format(args.num_gpus))
        device = torch.device("cuda" if use_cuda else "cpu")

        if use_cuda:
            torch.cuda.manual_seed(args.seed)

        train_loader = _get_train_data_loader(args.hub_path, args.batch_size, args.num_workers)

        logger.debug(
            "Processes {}/{} ({:.0f}%) of train data".format(
                len(train_loader.sampler),
                len(train_loader.dataset),
                100.0 * len(train_loader.sampler) / len(train_loader.dataset),
            )
        )

        model = net.to(device)
        model = torch.nn.DataParallel(model)

        optimizer = optim.SGD(model.parameters(), lr=args.lr, momentum=args.momentum)
        criterion = nn.CrossEntropyLoss()

        for epoch in range(args.epochs):  # loop over the dataset multiple times
            model.train()
            running_loss = 0.0
            start_time = time.time()
            for i, data in enumerate(train_loader):
                # get the inputs; data is a dict with 'images' and 'labels'
                inputs = data['images']
                labels = data['labels']

                inputs = inputs.to(device)
                labels = labels.to(device)

                # zero the parameter gradients
                optimizer.zero_grad()

                # forward + backward + optimize
                outputs = model(inputs.float())
                loss = criterion(outputs, labels)
                loss.backward()
                optimizer.step()

                # print statistics
                running_loss += loss.item()
                if i % 10 == 0:  # print every 10 mini-batches
                    batch_time = time.time()
                    speed = (i + 1) / (batch_time - start_time)  # batches per second
                    logger.info('[%d, %5d] loss: %.3f, speed: %.2f ' %
                                (epoch + 1, i, running_loss, speed))
                    running_loss = 0.0

        print('Finished Training')

Instantiating AWS SageMaker

We need the proper IAM setup before we work with SageMaker. Setting an IAM role (referred to as an execution role) ensures that SageMaker is able to perform operations on our behalf.

    import sagemaker

    sess = sagemaker.Session()
    role = "SageMakerRole"

We can then instantiate a SageMaker object called est. We configure it with our training script, an IAM role, the number of training instances, the training instance type, and hyperparameters (a dict of values that will be passed to our training script).

This seemingly simple API does plenty of work behind the scenes: not only is the object responsible for provisioning hardware, it is also responsible for launching pre-specified scripts, logging metrics and handling artifacts.

    from sagemaker.pytorch import PyTorch

    # instance_type is assumed to be defined already (this case study ran on a P3 instance)
    est = PyTorch(
        entry_point="train.py",
        source_dir="./train_code",  # directory of your training script
        role=role,
        framework_version="1.8.0",
        py_version="py3",
        instance_type=instance_type,
        instance_count=1,
        hyperparameters={
            "hub-path": "s3://bucket/dataset/cars",
            "batch-size": 32,
            "epochs": 1,
            "lr": 1e-3,
            "num-workers": 8,
            "log-interval": 100,
        },
    )
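SageMaker hands the hyperparameters from the estimator above to the entry-point script as `--key value` command-line arguments, and exposes cluster details through environment variables such as `SM_HOSTS` and `SM_NUM_GPUS`. A sketch of how `train.py` might turn those into the `args` object that `train` expects follows. The defaults for `momentum`, `seed` and `backend` are assumptions, since the estimator does not set them.

```python
import argparse
import json
import os


def parse_args(argv=None):
    """Parse the hyperparameters SageMaker passes as --key value arguments."""
    parser = argparse.ArgumentParser()
    # Hyperparameters set on the estimator.
    parser.add_argument("--hub-path", type=str, required=True)
    parser.add_argument("--batch-size", type=int, default=32)
    parser.add_argument("--epochs", type=int, default=1)
    parser.add_argument("--lr", type=float, default=1e-3)
    parser.add_argument("--num-workers", type=int, default=8)
    parser.add_argument("--log-interval", type=int, default=100)
    # Assumed defaults for values train() reads but the estimator does not set.
    parser.add_argument("--momentum", type=float, default=0.9)
    parser.add_argument("--seed", type=int, default=1)
    parser.add_argument("--backend", type=str, default=None)
    # Cluster details that SageMaker exposes through environment variables;
    # the fallbacks allow the script to run outside SageMaker too.
    parser.add_argument("--hosts", type=json.loads,
                        default=json.loads(os.environ.get("SM_HOSTS", '["localhost"]')))
    parser.add_argument("--num-gpus", type=int,
                        default=int(os.environ.get("SM_NUM_GPUS", 0)))
    return parser.parse_args(argv)


# Simulate the arguments SageMaker would pass for two of the hyperparameters.
args = parse_args(["--hub-path", "s3://bucket/dataset/cars", "--batch-size", "32"])
print(args.hub_path, args.batch_size, args.lr)
```

argparse converts the dashes in option names to underscores, which is why `"hub-path"` on the estimator becomes `args.hub_path` in the script.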

Entire tutorials can be written about SageMaker (and have been). For more information, you can go through the documentation or the official tutorials.

The train loop

Finally, we launch the job with:

    est.fit()

When called by SageMaker, Hub fetches data from the source (e.g., local disk, remote object store or Hub Platform) and shunts it directly to the ResNet model, bypassing disk IO in the process. By streaming data directly to the model, we can launch training immediately without the entire dataset in hand.
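The general idea behind streaming can be sketched with a bounded buffer: a background thread fetches batches ahead of the consumer, so fetching and training overlap, while the buffer size caps how much data is held at once. This is an illustration of the prefetching pattern using only the standard library, not Hub's implementation. The function names, the buffer size and the simulated delays are assumptions.

```python
import queue
import threading
import time
from typing import Iterable, Iterator

_DONE = object()  # sentinel marking the end of the stream


def prefetch(batches: Iterable, buffer_size: int = 4) -> Iterator:
    """Yield batches while a background thread keeps a small buffer filled."""
    buffer: queue.Queue = queue.Queue(maxsize=buffer_size)

    def producer() -> None:
        try:
            for batch in batches:
                buffer.put(batch)  # blocks while the buffer is full
        finally:
            buffer.put(_DONE)  # always signal the end, even after an error

    threading.Thread(target=producer, daemon=True).start()
    while True:
        batch = buffer.get()  # waits only if the producer has fallen behind
        if batch is _DONE:
            return
        yield batch


def slow_source(n: int, delay: float = 0.01):
    """Stand-in for remote reads: each batch takes `delay` seconds to fetch."""
    for i in range(n):
        time.sleep(delay)
        yield i


consumed = []
for batch in prefetch(slow_source(5)):
    time.sleep(0.01)  # stand-in for the GPU step; overlaps with the next fetch
    consumed.append(batch)
print(consumed)
```

The bounded queue is the design choice that matters: it lets the producer run ahead by a few batches without ever pulling the whole dataset into memory.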

Of course, that won’t mean much for small datasets. But for medium-sized datasets like ImageNet and larger, that could mean shaving off 40+ hours from experimentation time.

Maximizing GPU usage

Without any GPU optimization, it is not uncommon to see 10-30% GPU utilization during training. This underutilization might be acceptable for smaller projects, but it can be a major source of cost for larger experiments, where every cycle counts. There are multiple reasons for low GPU usage, which we will delve into in a future blog post. With Hub, however, we address one of the main causes and are able to hit 90-95% GPU usage without much additional effort. Moreover, although the entire dataset is never available locally, Hub delivers “just enough” data at runtime to avoid GPU starvation.

As a result, we can store datasets in the cloud (and enjoy scalability and accessibility) without becoming IO-bound or suffering from low GPU utilization.
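A simplified two-stage model shows why overlapping data loading with compute has such a large effect. If each step first loads a batch and then computes on it, the GPU is busy for only part of the step. If loading runs in parallel with compute, the step takes as long as the slower of the two. The function name and the per-batch timings below are illustrative assumptions, not measurements from this case study.

```python
def busy_fraction(load_s: float, compute_s: float, overlapped: bool) -> float:
    """Fraction of each step the GPU is busy under a simplified two-stage model."""
    # Overlapped: the step lasts as long as the slower stage.
    # Sequential: the step is loading followed by compute.
    step_s = max(load_s, compute_s) if overlapped else load_s + compute_s
    return compute_s / step_s


# Assumed per-batch timings: 80 ms to fetch and decode, 100 ms of GPU work.
print(f"sequential: {busy_fraction(0.08, 0.10, overlapped=False):.0%}")
print(f"overlapped: {busy_fraction(0.08, 0.10, overlapped=True):.0%}")
```

In this model the GPU only starves when loading is slower than compute, which is the condition that delivering “just enough” data is meant to avoid.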

Conclusion: use Hub to fix low GPU usage and 2x your SageMaker training

This case study demonstrated that we can take advantage of modern cloud infrastructure without sacrificing GPU performance.

First, we converted a dataset into Hub format, which effectively organizes chunked tensors over remote machines. We saw that Hub format also allows us to store large datasets, remotely or locally, with only a few additional lines of code.

Then we instantiated a SageMaker PyTorch object with the appropriate hyperparameters. By following the template for SageMaker projects, we were able to launch and configure a P3 instance without much hassle.

Finally, we streamed Hub data from S3 to our virtual SageMaker instance. Had we wanted to, we could have easily transformed incoming data (perhaps for data augmentation) before it was consumed by our model. Because Hub fetches “just enough” data at runtime to prevent GPU starvation, we could start training without the complete dataset.

Jayanth Anantha Raman, Vin Tang & Davit Buniatyan have contributed to this article.