Introduction

When selecting a vector database for production-grade Retrieval Augmented Generation (RAG) applications, two things matter most: speed and affordability. Deep Lake 3.7.1 introduces a unique, high-performance implementation of the HNSW Approximate Nearest Neighbor (ANN) search algorithm. It speeds up index creation, reduces RAM usage, and integrates with Deep Lake's Query Engine for fast filtering on metadata, text, or other attributes. The new index raises the limit for sub-second vector search from 1 million to more than 35 million embeddings while significantly reducing the cost of running a vector database in production.
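To make the idea of combining attribute filters with vector search concrete, here is a minimal pre-filtering sketch in Python with NumPy. It is not Deep Lake's Query Engine, whose internals are not described here; the function `filtered_search`, its `predicate` argument, and the `source` metadata field are hypothetical names chosen for illustration. Rows are first narrowed by a metadata predicate, then ranked by cosine similarity to the query.

```python
import numpy as np


def filtered_search(embeddings, metadata, query, predicate, k=3):
    """Hypothetical helper: top-k cosine search restricted to rows whose metadata passes `predicate`.

    Pre-filtering: the metadata predicate runs first, and similarity is
    computed only for the rows that survive it.
    """
    # Indices of rows that pass the metadata filter
    candidates = np.array([i for i, m in enumerate(metadata) if predicate(m)], dtype=int)
    if candidates.size == 0:
        return []
    sub = embeddings[candidates]
    # Cosine similarity between the query and each surviving row
    sims = sub @ query / (np.linalg.norm(sub, axis=1) * np.linalg.norm(query))
    # Sort by descending similarity and keep the k best
    order = np.argsort(-sims)[:k]
    return candidates[order].tolist()


# Toy data: six 4-dimensional embeddings tagged with a hypothetical "source" field
rng = np.random.default_rng(0)
emb = rng.normal(size=(6, 4))
meta = [{"source": "docs"}, {"source": "blog"}] * 3
hits = filtered_search(emb, meta, emb[2], lambda m: m["source"] == "docs", k=2)
print(hits)  # row 2 comes first, since it is the query itself
```

Filtering before ranking guarantees that every returned row matches the filter; the trade-off is that the similarity step must run over whatever survives the filter.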

Scalability and Performance in Deep Lake 3.7.1

Previous versions of Deep Lake used a high-performance implementation of linear search to compute embedding similarity. Linear search compares the query against every stored embedding, so while it works well for smaller vector stores, it becomes too slow for stores exceeding 1 million embeddings. Deep Lake 3.7.1 adds an advanced implementation of Approximate Nearest Neighbor (ANN) search that brings search latency under one second for 35 million embeddings. For smaller stores with fewer than 100,000 embeddings, linear search remains the preferred method because it returns exact results; ANN search is recommended at larger scales.
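The sketch below shows what exact linear search involves and how the size guidance above might be applied. It is an illustrative NumPy implementation, not Deep Lake's; `linear_search`, `choose_search_method`, and the `LINEAR_SEARCH_MAX` constant are hypothetical, with the 100,000 cutoff taken from the paragraph above.

```python
import numpy as np

# Hypothetical cutoff, taken from the "under 100,000 embeddings" guidance
LINEAR_SEARCH_MAX = 100_000


def linear_search(embeddings: np.ndarray, query: np.ndarray, k: int = 5) -> np.ndarray:
    """Exact (brute-force) top-k search by cosine similarity.

    Every stored embedding is compared with the query, so the cost per
    query grows linearly with the number of rows: O(n * d).
    """
    # Normalize rows and query so a dot product equals cosine similarity
    normed = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    q = query / np.linalg.norm(query)
    sims = normed @ q
    k = min(k, len(sims))
    # argpartition isolates the k best scores in O(n); only those k are then sorted
    top = np.argpartition(-sims, k - 1)[:k]
    return top[np.argsort(-sims[top])]


def choose_search_method(num_embeddings: int) -> str:
    """Hypothetical helper: exact search for small stores, ANN for large ones."""
    return "linear" if num_embeddings < LINEAR_SEARCH_MAX else "ann"


rng = np.random.default_rng(1)
store = rng.normal(size=(1_000, 64))
print(linear_search(store, store[42], k=3))  # first hit is row 42 itself
print(choose_search_method(len(store)))      # linear
```

Because every query touches all n rows, doubling the store roughly doubles query time, which is why an ANN index becomes worthwhile as collections grow into the millions.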

Deep Lake’s HNSW Implementation

Hierarchical Navigable Small World (HNSW) graphs are among the best-performing and most reliable indexes for vector similarity search. Deep Lake makes the HNSW index even more powerful with enhancements such as intelligent memory utilization and multithreaded index creation. By distributing data in the Deep Lake Vector Store across object storage, attached storage (on-disk), and RAM, Deep Lake minimizes the use of costly memory while maintaining high performance, a must-have for building RAG-based Large Language Model (LLM) applications at scale.
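At query time, an HNSW index walks a proximity graph instead of scanning every vector. The following Python sketch shows the best-first search routine that standard HNSW runs on each graph layer (the SEARCH-LAYER procedure from Malkov and Yashunin's HNSW paper). It is a simplified illustration, not Deep Lake's implementation: it covers a single layer, omits graph construction, and the function name `greedy_layer_search` and the toy data are hypothetical.

```python
import heapq

import numpy as np


def greedy_layer_search(vectors, neighbors, query, entry, ef=4):
    """Best-first beam search over one proximity-graph layer.

    vectors:   (n, d) array of stored embeddings
    neighbors: dict mapping node id -> list of adjacent node ids
    entry:     node id where the search starts
    ef:        size of the dynamic result list (larger = more accurate, slower)
    Returns up to ef node ids sorted by Euclidean distance to the query.
    """
    def dist(i):
        return float(np.linalg.norm(vectors[i] - query))

    visited = {entry}
    d0 = dist(entry)
    candidates = [(d0, entry)]  # min-heap: closest unexpanded node on top
    results = [(-d0, entry)]    # max-heap via negation: farthest kept result on top
    while candidates:
        d, node = heapq.heappop(candidates)
        # Stop once the closest unexpanded node is farther than the worst kept result
        if d > -results[0][0]:
            break
        for nb in neighbors[node]:
            if nb in visited:
                continue
            visited.add(nb)
            dn = dist(nb)
            if len(results) < ef or dn < -results[0][0]:
                heapq.heappush(candidates, (dn, nb))
                heapq.heappush(results, (-dn, nb))
                if len(results) > ef:
                    heapq.heappop(results)  # drop the farthest result
    return [node for _, node in sorted((-negd, node) for negd, node in results)]


# Toy layer: points 0..9 on a line, each linked to its immediate neighbors
pts = np.arange(10, dtype=float).reshape(-1, 1)
graph = {i: [j for j in (i - 1, i + 1) if 0 <= j < 10] for i in range(10)}
print(greedy_layer_search(pts, graph, np.array([6.2]), entry=0, ef=3))  # -> [6, 7, 5]
```

A full HNSW index stacks several such graphs: sparse upper layers are searched with a very small candidate list to find a good entry point, and the dense bottom layer is then searched with a larger `ef` to produce the final results. Raising `ef` trades speed for recall.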

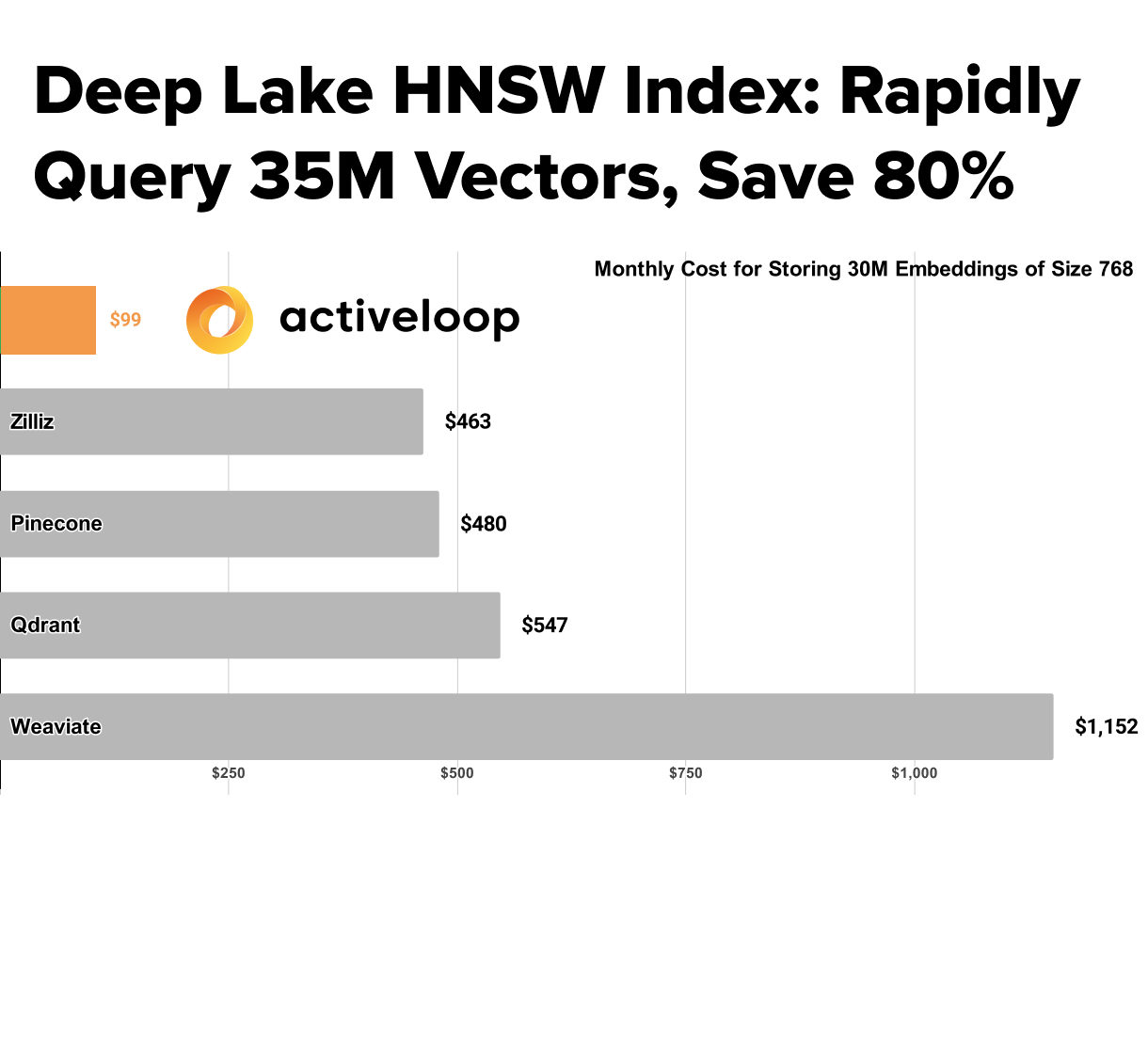

Top-tier Performance Without the Hefty Price Tag of Other Vector Databases

Most vector databases were originally designed for applications such as recommendation engines, which require real-time search across millions of requests per day. As a result, they are typically implemented in memory and rely heavily on RAM for data storage. Because an LLM response can take several seconds to generate, a real-time in-memory vector database adds little for RAG: it significantly increases costs without meaningfully improving the end-to-end user experience. Thanks to Deep Lake's efficient memory architecture, we've cut storage costs by more than 80% compared to many leading competitors without sacrificing the performance of your LLM apps. Our lower costs, combined with industry-leading ease of use, give customers a risk-free path for scaling projects from prototype to production.
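A back-of-the-envelope calculation shows why keeping everything in RAM gets expensive at this scale. The sketch below estimates the memory needed to hold raw vectors plus HNSW base-layer links fully in memory. It assumes 1536-dimensional float32 embeddings (the output size of OpenAI's text-embedding-ada-002, for example) and `m = 16` neighbors per node; `index_memory_gb` is a hypothetical helper, and the estimate ignores upper graph layers, metadata, and allocator overhead.

```python
def index_memory_gb(num_vectors: int, dim: int, bytes_per_value: int = 4,
                    m: int = 16, bytes_per_link: int = 4) -> dict:
    """Hypothetical rough RAM estimate for an all-in-memory HNSW index.

    Assumes float32 vectors and 2*m neighbor ids per node on the base layer;
    upper layers, metadata, and allocator overhead are ignored.
    """
    vector_bytes = num_vectors * dim * bytes_per_value
    link_bytes = num_vectors * 2 * m * bytes_per_link
    return {
        "vectors_gb": vector_bytes / 1e9,
        "links_gb": link_bytes / 1e9,
        "total_gb": (vector_bytes + link_bytes) / 1e9,
    }


# 35 million embeddings, matching the scale discussed in this post
est = index_memory_gb(35_000_000, 1536)
print({k: round(v, 2) for k, v in est.items()})
# -> {'vectors_gb': 215.04, 'links_gb': 4.48, 'total_gb': 219.52}
```

Under these assumptions, roughly 220 GB of RAM must stay provisioned before any overhead, which is the kind of always-on cost that tiering data across disk and object storage is meant to avoid.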

Conclusion

As the world of LLM applications grows and matures, scaling without burning through your budget is paramount for taking prototypes to production. Deep Lake stands out by delivering sub-second vector search on datasets of up to 35 million embeddings, at a cost more than 80% lower than many other vector databases on the market. Your search for a powerful, budget-friendly vector database ends with Deep Lake.

Try out Deep Lake Index today.